Sometimes I feel that using LPG parsers is like walking the fine line between total madness and easy results. The results are (often) fine, as the generator produces a good enough parser with error recovery and similar mechanisms (I don’t want to compare it with other generators), but sometimes the lack of documentation can be prohibitive.

One problem I ran into: I wanted to use both documentation comments and multiline comments in a Java-like syntax (so /* comment */ is a plain comment, while /** comment */ is a documentation comment). I tried to find either examples or documentation about the solution to this problem, but couldn’t find any (at least until recently).

The solution consists of two parts: the lexer should be capable of differentiating between the two comment types (it should be able to emit the corresponding tokens), and then either the parser or the resolver should be able to read the comments.

Lexing issues

The first problem is hard, as we have to create the rules describing the comment tokens in a constructive way: there is no inversion, and it is better if the two rules are non-conflicting. The basic idea is the following: a multiline comment starts with the two characters '/' and '*', followed by a non-star character, followed by a sequence of characters that does not contain the closing '*' '/' pair. A documentation comment looks the same, except that the opening '/' '*' is followed by a second '*'.

So far it sounds easy, but the grammar language of the LPG generator does not support negation. Luckily we have a finite alphabet, so the number of two-character sequences is also finite, which means it is possible to write the rule described above in a constructive way:

    doc ::= '/' '*' '*' CommentBody Stars '/'
    mlc ::= '/' '*' NotStar CommentBody Stars '/'

    CommentBody$$CommentBody ::= CommentBody Stars NotSlashOrStar |
                                 CommentBody '/' | CommentBody NotSlashOrStar |
                                 Stars NotSlashOrStar | '/' | NotSlashOrStar

    NotSlashOrStar ::= letter | digit | whiteChar | AfterASCII |
                       '+' | '-' | '(' | ')' | '"' | '!' | '@' | '`' | '~' | '.' |
                       '%' | '&' | '^' | ':' | ';' | "'" | '\' | '|' | '{' | '}' |
                       '[' | ']' | '?' | ',' | '<' | '>' | '=' | '#' | '$' | '_'

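Yes, it is quite hard to write and even harder to understand at first, but at least it works. (The Stars and NotStar rules are not shown: Stars stands for a run of one or more '*' characters, and NotStar for any character other than '*'.)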
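To make the idea behind these rules easier to follow, here is a minimal Java sketch of the same recognition written as a tiny state machine instead of a grammar. It is not what LPG generates; the class name `CommentClassifier` and the `Kind` enum are made up for this illustration. The only state it keeps is whether the previous character was a star, which plays the role of the Stars nonterminal. Treating `/**/` as a plain comment is my assumption, borrowed from Java.

```java
// Hypothetical helper for illustration only; not part of LPG or of the generated lexer.
final class CommentClassifier {
    enum Kind { DOC, MULTILINE, NONE }

    /** Classifies a text that is expected to be exactly one block comment. */
    static Kind classify(String text) {
        if (!text.startsWith("/*")) {
            return Kind.NONE;
        }
        // True while the most recent characters form a run of '*' (the "Stars" part).
        boolean afterStar = false;
        for (int i = 2; i < text.length(); i++) {
            char c = text.charAt(i);
            if (afterStar && c == '/') {
                // "*/" closes the comment, so nothing may follow it.
                if (i != text.length() - 1) {
                    return Kind.NONE;
                }
                // Assumption: "/**/" counts as an empty plain comment, as in Java.
                boolean doc = text.startsWith("/**") && text.length() > 4;
                return doc ? Kind.DOC : Kind.MULTILINE;
            }
            afterStar = (c == '*');
        }
        return Kind.NONE; // input ended before the closing "*/"
    }

    public static void main(String[] args) {
        System.out.println(classify("/* plain */"));               // MULTILINE
        System.out.println(classify("/** documented */"));         // DOC
        System.out.println(classify("/*****\n * box *\n *****/")); // DOC
    }
}
```

The grammar has to spell out every allowed character because it cannot say "anything but a star"; the sketch can simply compare characters, which is why it is so much shorter.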

Parsing the comments

After the lexer understands the comments, the parser also needs to be aware of them. A (seemingly) easy way to handle this is the following: the multiline comment is exported by the lexer as a comment token, while the documentation comment is exported as an ordinary token. The parser can then reference documentation comments in its rules and handle them similarly to all other tokens.

On the other hand, this approach has a serious drawback: the documentation comment is no longer a comment, so it cannot be placed anywhere; it is only allowed where the grammar permits it. At first this does not look like a big problem, but it causes trouble in two ways. First, if you are extending an existing language, this could break some texts, possibly created by other users, and the default error message is hard to understand. Second, it disallows long lines of stars at the beginning of plain multiline comments, as in the following snippet:

    /*********************
     *                   *
     * Comment in a box  *
     *********************/

The reason: a plain comment must not start with two or more star characters, so this block is interpreted as a documentation comment.

If these problems cannot be ignored, both the comments and the documentation comments should be marked as comment tokens that are swallowed by the lexer, so the parser does not see any comment tokens (as it should be). The drawback of this approach is that the position of the documentation comments cannot be controlled in the parser, so the later processing has to ignore documentation comments at invalid locations.

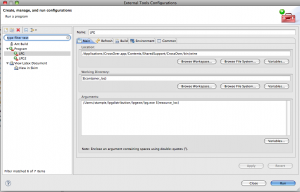

A final step is needed to read these documentation comments: they have to be read, but they are not present in the AST, at least not directly. On the other hand, following a tip from the EclipseCon 2009 tutorial “PIMP Your Eclipse: Building an IDE using IMP”, the preceding adjuncts of an AST node can be read. This terminology was not clear to me, and the missing Javadoc did not help either, so this tutorial was a great help.

To tell the truth, I am still interested in the exact definition of adjuncts in LPG, but as far as I know, the comment tokens are included among them in their raw form. Raw form means that every character is included, including whitespace and the starting and ending delimiters, so they might need another parsing step with a different grammar.
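Short of a second grammar, a little string processing already gets rid of most of the raw-form noise. The sketch below is only an illustration under my own assumptions: the class `DocCommentText` and its `clean` method are hypothetical names, and the layout conventions (decorative stars at the start of each line, possibly several stars before the closing slash) are borrowed from Javadoc rather than from anything LPG defines.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration only; not part of LPG or IMP.
final class DocCommentText {
    /** Strips the delimiters and the decorative leading stars from a raw documentation comment. */
    static String clean(String raw) {
        String body = raw.trim();
        // "/**/" is rejected by the length check: it has no room for a body.
        if (!body.startsWith("/**") || !body.endsWith("*/") || body.length() < 5) {
            throw new IllegalArgumentException("not a documentation comment: " + raw);
        }
        body = body.substring(3, body.length() - 2);
        // The closing delimiter may be written with several stars, e.g. "**/".
        while (body.endsWith("*")) {
            body = body.substring(0, body.length() - 1);
        }
        List<String> lines = new ArrayList<>();
        for (String line : body.split("\\R")) {
            String text = line.trim();
            int start = 0;
            while (start < text.length() && text.charAt(start) == '*') {
                start++; // skip the decorative stars at the start of the line
            }
            lines.add(text.substring(start).trim());
        }
        // Blank lines inside the comment are kept; the outer ones are trimmed away.
        return String.join("\n", lines).trim();
    }

    public static void main(String[] args) {
        System.out.println(clean("/**\n * First line.\n * Second line.\n */"));
    }
}
```

This only recovers the text of the comment. Anything structured inside it (tags, references to other elements) would still need the separate parsing step mentioned above.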

But for simple handling, the following code can be used to read the first documentation comment before an element:

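    // IToken is lpg.runtime.IToken; ASTNode is the AST node type of the generated parser.
    public String parseDocComments(ASTNode node) {
        // The adjuncts are the tokens (e.g. comments) skipped by the lexer before this node.
        IToken[] adjuncts = node.getPrecedingAdjuncts();
        for (IToken adjunct : adjuncts) {
            // The raw text of the token, including the comment delimiters.
            String adjString = adjunct.toString();
            if (adjString.startsWith("/**")) {
                return adjString;
            }
        }
        // No documentation comment precedes this node.
        return null;
    }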
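If several documentation comments can precede the same element, it may be more natural to take the one closest to the element instead of the first one. The following variant is a sketch of that choice. To stay runnable without the LPG runtime it works on the raw adjunct texts as plain strings; the class `NearestDocComment` is a hypothetical name, and I assume the adjuncts arrive in source order.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Hypothetical helper for illustration only; it takes the raw adjunct texts as strings.
final class NearestDocComment {
    /** Returns the documentation comment closest to the element, if there is one. */
    static Optional<String> find(List<String> precedingAdjuncts) {
        // Assumption: the list is in source order, so the last entry is the nearest one.
        for (int i = precedingAdjuncts.size() - 1; i >= 0; i--) {
            String raw = precedingAdjuncts.get(i);
            // Assumption: "/**/" is an empty plain comment, not a documentation comment.
            if (raw.startsWith("/**") && raw.length() > 4) {
                return Optional.of(raw);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        List<String> adjuncts = Arrays.asList("/** old */", "/* note */", "/** new */");
        System.out.println(find(adjuncts).orElse("(none)")); // /** new */
    }
}
```

Returning an Optional instead of null also makes the "no documentation comment" case explicit for the caller.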

Conclusion

Allowing documentation comments can be quite a challenge, especially getting the syntax right without any conflict between the rules, but it is certainly possible.

On the other hand, the language of the documentation comments has to be defined separately, and not even the lexer can be reused from the original grammar, as it uses different terminal rules (e.g. the documentation comment must not be a comment token in this language). Even worse, having two different grammars makes it harder to provide correct coding help in the IDE (e.g. content assist, source coloring, etc.). These areas need further experimenting with the tools, but at least the solution is working right now.